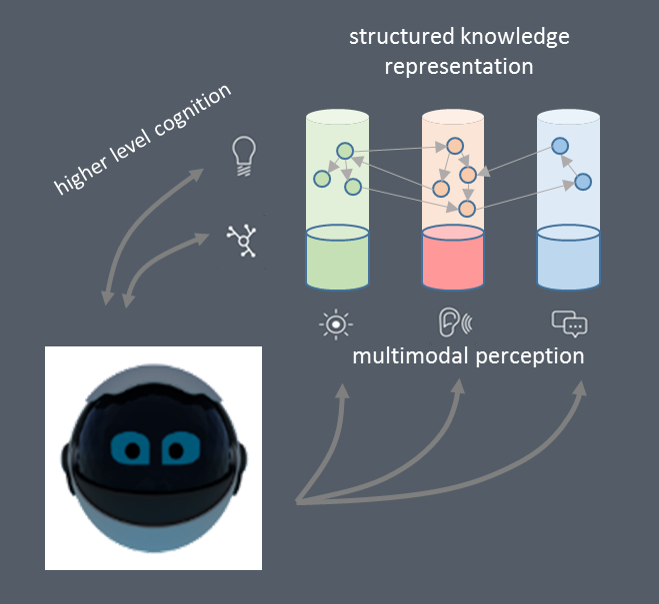

Perception is a system’s ability to receive and evaluate useful information about its environment. It comprises manifold capabilities such as detection, recognition, tracking and state estimation based on sensory measurements.

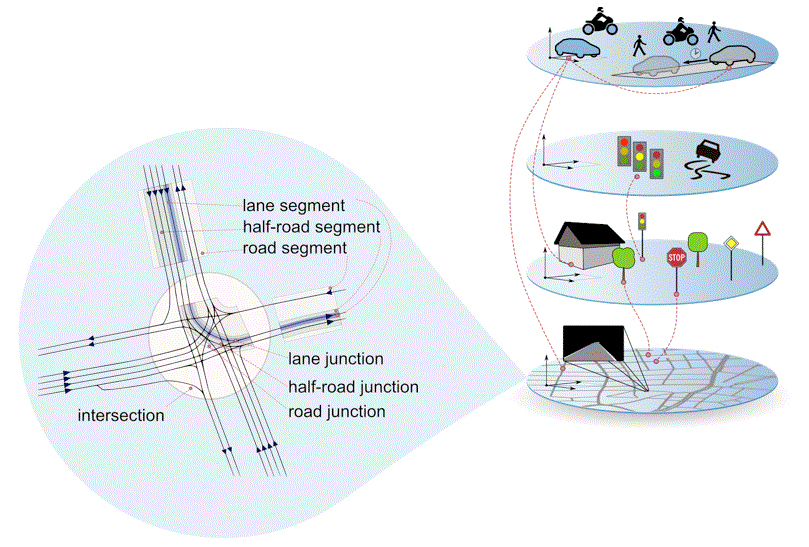

Localization on maps at lane-level accuracy is a key capability for future autonomous driving systems. It enables intelligent vehicles to better understand their environment and to predict the future trajectories of other traffic participants, and it allows for an improved evaluation of behavior options.