Ethics in AI

When Soichiro Honda established the Honda Technical Research Institute, and later Honda Motor Co., Ltd., after the Second World War, he was well aware of the responsibility that engineers and entrepreneurs bear toward society. Since those early days, Honda’s philosophy has been inseparable from the principle of being a company that “people and society want to exist”. As we research new, possibly disruptive technologies for intelligent and increasingly autonomous systems, we at HRI feel it is our responsibility to consider how artificial intelligence might affect each of us in our future lives.

Cooperative Intelligence will maintain and enhance individual capabilities and create a new hybrid community of people and machines. If we create systems that are able to evolve and learn, their future behavior can no longer be predicted by the human designer. This freedom of behavioral choice, which can be seen as a prerequisite for artificial intelligence, needs an ethical framework that ensures the new hybrid society will uphold basic human values. Researching the individual and social implications of the hybrid society therefore has to be part of our activities. To establish machine ethics, we need to share values, and to share values we need to share experiences with machines rather than merely interact with them. Together with researchers from universities, we want to contribute to the necessary public dialogue about the ethical considerations of these new technologies.

The approach of Cooperative Intelligence considers ethical Artificial Intelligence as a prerequisite for confidence and trust between human and machine. While most of the current research on ethics and technology concentrates on applying the ethical principle of preventing harm, at HRI-EU we focus on the aspects of fairness, mutual respect and explainability.

Integrating ethical principles into Artificial Intelligence reasoning gives the autonomous machine a human-like value framework for decision making and behavior.

HRI-EU develops Privacy by Design concepts to preserve privacy in future Artificial Intelligence applications that are driven by personal data, such as assistance robotics. User interfaces that provide meaningful explanations of Artificial Intelligence behavior are conceptualized and tested in smart home environments. Autonomous vehicles are improved by taking fairness into account when selecting and executing behavior.

Users perceive the resulting systems as acting more naturally and understandably – a human-centered Artificial Intelligence.

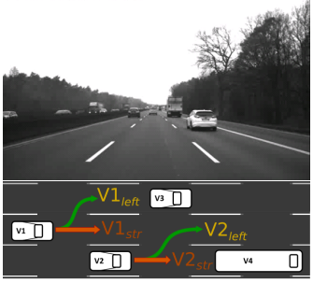

Applied Distributive Justice for Autonomous Driving

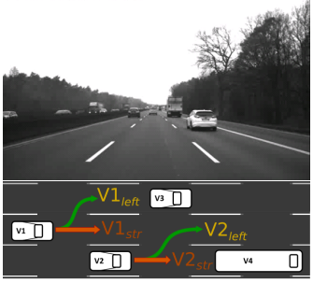

With Autonomous Vehicles (AVs), a new kind of traffic participant with Artificial Intelligence enters the shared public space. Considering ethical principles in every driving situation is highly relevant, because every driving decision affects the behavior options available to others. In our research, we propose adding a distributive justice model as a framework for the AV's decision process. This enables the future vehicle to operate ethically towards other traffic participants.

The AV behavioral architecture we developed treats the vehicle as part of a system that includes the other traffic participants. It systematically takes into account the perspective of all traffic participants, both the others and its own. When predicting the driving options, the AV also considers how the participants' options affect one another. Based on safety, utility and comfort needs, each behavior option is assigned a calculated cost. The behavior planning module compares all available options and decides on an action according to a just distribution of the costs over the whole system.
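The selection step described above can be illustrated with a small sketch. It is not the architecture from the cited paper: the weights, the cost scale, the scenario values and the names `WEIGHTS`, `Option`, `participant_cost`, `distribution` and `choose_option` are all hypothetical. In particular, the justice criterion used here (minimize the cost of the worst-off participant, then break ties by total cost) is only one possible reading of a "just distribution" and is an assumption of this example.

```python
from dataclasses import dataclass

# Hypothetical weights for the three needs named in the text.
WEIGHTS = {"safety": 0.6, "utility": 0.3, "comfort": 0.1}


@dataclass
class Option:
    """One candidate behavior of the AV (illustrative structure)."""
    name: str
    # participant -> need -> cost in [0, 1]; higher means worse for them
    costs: dict[str, dict[str, float]]


def participant_cost(needs: dict[str, float]) -> float:
    """Combine safety, utility and comfort costs into one number."""
    return sum(WEIGHTS[need] * needs[need] for need in WEIGHTS)


def distribution(option: Option) -> dict[str, float]:
    """Cost that the option imposes on every participant, the AV included."""
    return {p: participant_cost(needs) for p, needs in option.costs.items()}


def choose_option(options: list[Option]) -> Option:
    """Assumed criterion: smallest worst-case cost, ties broken by total cost."""
    def key(option: Option) -> tuple[float, float]:
        costs = distribution(option).values()
        return (max(costs), sum(costs))
    return min(options, key=key)


# Made-up merging scenario with two participants.
options = [
    Option("merge_now", {
        "av":    {"safety": 0.1, "utility": 0.0, "comfort": 0.1},
        "other": {"safety": 0.5, "utility": 0.4, "comfort": 0.6},
    }),
    Option("yield", {
        "av":    {"safety": 0.1, "utility": 0.5, "comfort": 0.2},
        "other": {"safety": 0.1, "utility": 0.1, "comfort": 0.1},
    }),
]

best = choose_option(options)
print(best.name)  # prints: yield
```

In this made-up scenario, merging immediately is cheapest for the AV but shifts a cost of about 0.48 onto the other driver, while yielding costs the AV about 0.23 and the other driver about 0.10. Under the assumed criterion the planner therefore yields. A different justice principle, for example minimizing the total cost only, could be swapped into `choose_option` without changing the rest of the sketch.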

The addition of a distributive justice model aims to make road usage for human drivers and autonomous vehicles in a shared space a just experience.

For more information

M. Dietrich and T. Weisswange, “Distributive Justice as an Ethical Principle for Autonomous Vehicle Behavior Beyond Hazard Scenarios”, Ethics and Information Technology, 2019
